Comparative Analysis of Affective Computing Databases

Introduction

Affective computing, the field concerned with integrating emotional intelligence into computer systems and applications, has gained significant attention in recent years. The ability of machines to understand, interpret, and respond to human emotions opens up new possibilities for a wide range of industries, from healthcare and customer service to entertainment and education. At the core of affective computing lies a rich set of databases that provide the data needed to train and evaluate these systems.

In this comparative analysis, we examine ten widely used affective computing databases.

Each of these databases offers unique features and characteristics, and understanding their strengths and limitations is crucial for researchers and developers in the field.

1. AFEW (Acted Facial Expressions in the Wild)

Link: AFEW Database

Pros:

Contains facial expressions acted in films, captured under close-to-real-world conditions rather than in a lab.

Offers a significant number of annotated videos for emotion recognition algorithm development.

Suitable for testing emotion recognition under close-to-real-world conditions such as varied lighting, head pose, and occlusion.

Cons:

Primarily focused on facial expressions, lacking data in other modalities.

Limited diversity in terms of capturing emotions within specific contexts.

2. MAHNOB-HCI (Multimodal Affective Human-Computer Interaction)

Link: MAHNOB-HCI Database

Pros:

Contains recordings of multimodal signals, including facial expressions, voice recordings, physiological signals, and eye gaze data.

Offers extensive and accurate annotations for emotion recognition.

Suitable for studying various affective states and their interactions.

Cons:

Smaller sample size, which may limit generalizability.

Predominantly focused on adult participants, potentially lacking diversity.

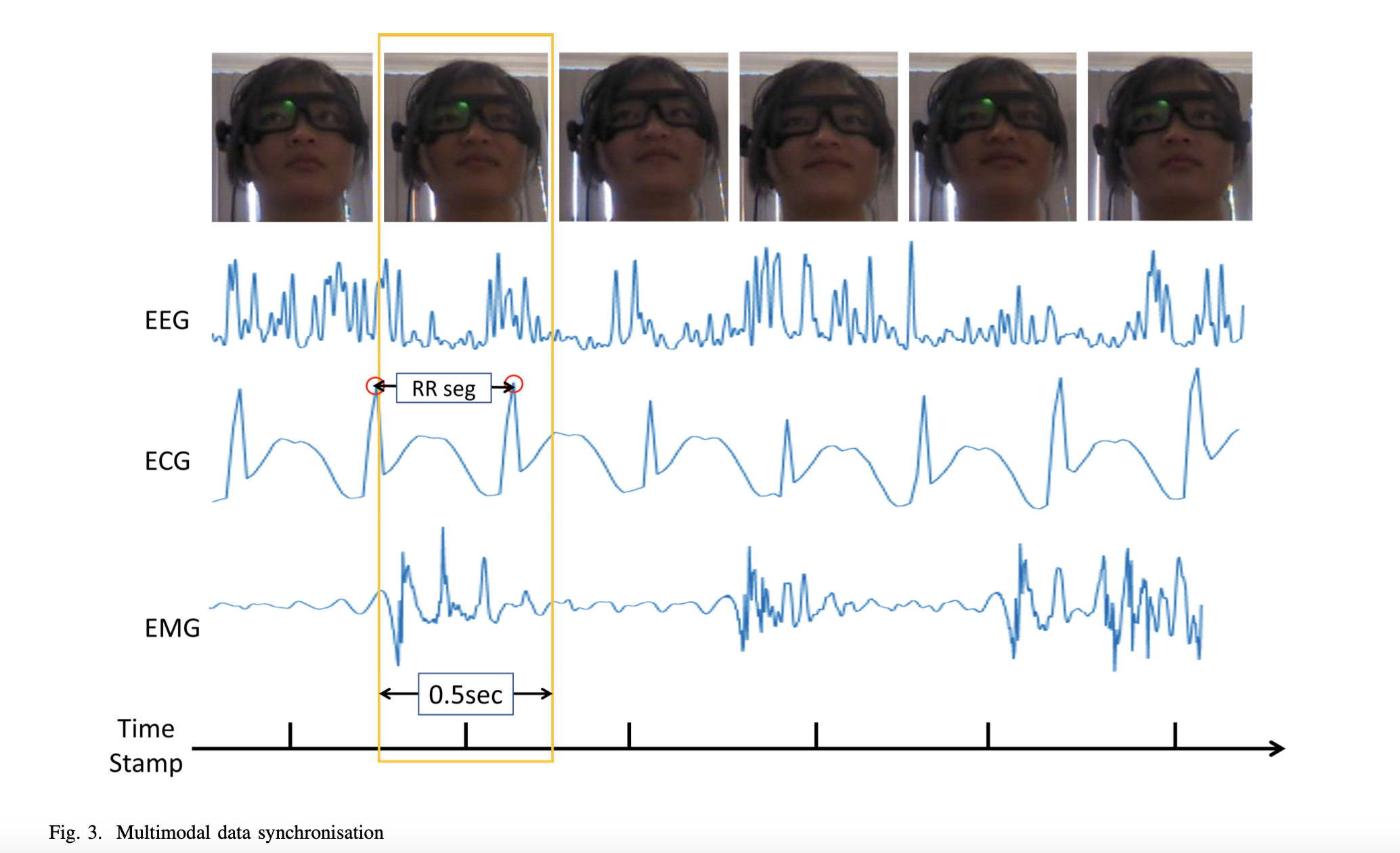

Figure showing how data streams are synchronised in multimodal affective computing databases.
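As a rough illustration of what synchronising multimodal streams involves, the sketch below pairs each video frame with the nearest sample from a physiological channel recorded at a different rate. The sampling rates, values, `max_gap` tolerance, and function names are assumptions made for this example; they do not describe how MAHNOB-HCI or any other database actually aligns its recordings.

```python
from bisect import bisect_left


def nearest_index(timestamps, t):
    """Return the index of the value in sorted `timestamps` closest to `t`."""
    i = bisect_left(timestamps, t)
    if i == 0:
        return 0
    if i == len(timestamps):
        return len(timestamps) - 1
    # Pick whichever neighbour is closer; ties go to the earlier sample.
    return i if timestamps[i] - t < t - timestamps[i - 1] else i - 1


def align(video_ts, physio_ts, physio_values, max_gap=0.05):
    """Pair each video frame time with the nearest physiological sample.

    Frames whose nearest sample is more than `max_gap` seconds away get None.
    """
    aligned = []
    for t in video_ts:
        j = nearest_index(physio_ts, t)
        value = physio_values[j] if abs(physio_ts[j] - t) <= max_gap else None
        aligned.append((t, value))
    return aligned


# Hypothetical data: video frames at 25 Hz, one physiological channel at 10 Hz.
video_ts = [k / 25 for k in range(5)]
physio_ts = [k / 10 for k in range(3)]
physio_values = [0.51, 0.55, 0.60]

pairs = align(video_ts, physio_ts, physio_values)
for frame_time, value in pairs:
    print(f"{frame_time:.2f}s -> {value}")
```

Nearest-timestamp matching is only one option; interpolation or resampling to a common rate may suit continuous signals better.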

3. LIRIS-ACCEDE (Annotated Creative Commons Emotional Database, from the LIRIS laboratory in Lyon)

Link: LIRIS-ACCEDE Database

Pros:

Focuses on the analysis of multimedia content, such as movie snippets, for studying emotional responses.

Diverse range of stimuli, including different movie genres and emotional scenarios.

Suitable for examining emotions within various contexts.

Cons:

May not capture longer-term emotional dynamics effectively due to the use of movie snippets.

Limited granularity for facial expression recognition compared to databases focused on facial expressions.

4. SyntAct (Syntactic and semantic modeling of affect-laden dialogues)

Link: SyntAct Database

Pros:

Immerses users in controlled VR environments for capturing emotional responses with ecological validity.

Offers detailed annotations, enabling in-depth analysis of emotions.

Suitable for research on affective computing in dialogues and virtual environments.

Cons:

A relatively new database, potentially offering fewer data options for specific analyses.

May not have the same diversity and quantity as more established resources.

Figure showing how the emotions “Fear” and “Disgust” are collected and annotated in publicly available multimodal affective computing databases.

5. MGEED (Multimodal Genuine Emotion and Expression Detection)

Link: MGEED Database

Pros:

Comprehensive collection of multimodal data, including facial expressions, body gestures, speech attributes, and physiological signals.

Robust sample size and diversity with a focus on a diverse participant pool.

Extensive annotations across multiple modalities, allowing for comprehensive analysis of emotional data.

Cons:

Limited Sample Size: Although the sample is comparatively large, it cannot cover the full spectrum of human emotional responses, which limits how far findings generalize to broader populations.

Potential Cultural Bias: The database may be skewed towards the cultural and demographic characteristics of the participants from the region where the data was collected (IIIT Hyderabad, India). This could introduce cultural biases and limit the applicability of the database to a global context.

Lack of Real-world Scenarios: The emotions in MGEED were captured in a controlled lab environment. Emotions expressed in controlled experiments may differ from those experienced in unscripted daily life, so the data may not reflect the full complexity of natural settings and real-world interactions.

Limited Contextual Information: MGEED primarily focuses on capturing emotions without extensive contextual information. Understanding the specific situational or environmental factors influencing emotions could enhance the database's utility.

Annotation Challenges: The detailed annotations in MGEED, while valuable, may not be immune to inter-rater variability, potentially affecting the reliability of the annotations.
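Inter-rater variability of the kind mentioned above is commonly quantified with an agreement statistic. The sketch below computes Cohen's kappa for two annotators in plain Python; the function name `cohen_kappa` and the example labels are hypothetical and are not taken from MGEED or any other database listed here.

```python
from collections import Counter


def cohen_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters labelling the same items.

    kappa = (p_o - p_e) / (1 - p_e), where p_o is the observed agreement
    and p_e is the agreement expected by chance from each rater's label
    frequencies.
    """
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("Both raters must label the same non-empty set of items.")

    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n

    counts_a = Counter(rater_a)
    counts_b = Counter(rater_b)
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)

    if expected == 1.0:
        # Both raters used a single identical label; agreement is trivially perfect.
        return 1.0
    return (observed - expected) / (1 - expected)


# Hypothetical annotations of four clips by two annotators.
annotator_1 = ["fear", "fear", "disgust", "disgust"]
annotator_2 = ["fear", "disgust", "disgust", "disgust"]

kappa = cohen_kappa(annotator_1, annotator_2)
print(f"kappa = {kappa:.2f}")  # 0.50 for this toy example
```

Values near 1 indicate strong agreement and values near 0 indicate agreement no better than chance. Datasets annotated by more than two raters would need a different statistic, such as Fleiss' kappa.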

Diagram showing the steps for creating, validating, and sharing an open-source affective computing database.

6. CK+ (Extended Cohn-Kanade)

Link: CK+ Database

Pros:

Primarily focused on capturing facial expressions, beneficial for developing facial expression recognition models.

Offers annotated facial expression data, suitable for training and evaluating facial emotion recognition algorithms.

Cons:

Limited in terms of multimodal data, focusing mainly on facial expressions.

Lacks the richness and depth of information provided by databases like MGEED.

Biggest Pro and Con of each database listed.

7. FIJA (Facial, Interactions and Affective Domains)

Link: FIJA Database

Pros:

Emphasizes dyadic interactions, encompassing various affective states observed during social interactions.

Incorporates multimodal data, providing a more comprehensive view of affective dynamics.

Offers annotations that include both self-reported affective states and observer ratings, increasing data richness.

Cons:

Limited Generalizability: The focus on dyadic interactions may limit the generalizability of findings to other social interaction scenarios.

Complexity of Data: The inclusion of self-reported affective states and observer ratings can introduce subjectivity and potential biases into the dataset.

Smaller Dataset: FIJA may have a smaller dataset compared to some other databases, limiting the diversity of interactions and emotions captured.

8. SEMAINE (Sustained Emotionally colored Machine-human Interaction using Nonverbal Expression)

Link: SEMAINE Database

Pros:

Designed for studying emotionally grounded machine-human interactions.

Incorporates multiple modalities, particularly focusing on nonverbal channels of communication like facial expressions and speech.

Useful for research in affective computing related to human-robot or human-computer interaction scenarios.

Cons:

Potential Complexity: The inclusion of multiple modalities and nonverbal channels of communication can increase the complexity of data analysis, requiring advanced processing techniques.

Limited Real-World Scenarios: SEMAINE is designed for emotionally grounded machine-human interactions, which may not fully represent the complexity of real-world human-human interactions.

Limited Data Size: The database size might be smaller compared to more established datasets, potentially restricting the range of applications and analyses.

9. RECOLA (Remote Collaborative and Affective Interactions)

Link: RECOLA Database

Pros:

Concentrates on real-time affect recognition in both co-located and remote interactions, allowing for diverse applications.

Captures multimodal signals such as audio, visual, and physiological data, enabling a comprehensive analysis of affective interactions.

Cons:

Limited Annotational Detail: While it captures various modalities, the level of annotation for specific emotional states or expressions may not be as comprehensive as in some other databases.

Potential Challenges in Remote Settings: Capturing affective interactions in remote scenarios may introduce technical challenges and variations in data quality.

Smaller Sample Size: RECOLA may have a smaller sample size compared to larger-scale databases, which can limit the generalizability of results.

10. DEAP (Database for Emotion Analysis using Physiological signals)

Link: DEAP Database

Pros:

Focuses on affective responses captured through physiological signals, including EEG and peripheral signals such as galvanic skin response, respiration, and skin temperature.

Provides physiological data recordings in response to audiovisual emotional stimuli, facilitating the study of affective computing through biological markers.

Valuable for researchers investigating the connection between emotions and physiological signals.

Cons:

Specialized Focus: DEAP primarily focuses on physiological signals, which may limit its applicability to researchers not specifically interested in the connection between emotions and physiological data.

Limited Multimodality: Beyond physiological data, other modalities are sparsely covered (frontal face video was recorded for only a subset of participants), limiting the ability to study holistic affective responses.

Complexity in Data Analysis: The use of physiological signals may require specialized expertise in data analysis, making it less accessible to some researchers.

Conclusion

This comparative analysis shows the diverse range of resources available to researchers and developers in affective computing.

Each of these databases has its strengths and limitations, and researchers and developers must carefully select the most appropriate database based on their specific needs and objectives.

AFEW stands out for its in-the-wild facial expression data, while MAHNOB-HCI provides rich multimodal data for human-computer interaction research. LIRIS-ACCEDE offers a large collection of multimedia content for emotion analysis, but its focus on short clips limits its ability to capture long-term emotional dynamics. SyntAct provides an immersive VR environment for understanding emotional cues in dialogues but has a moderate sample size. MGEED offers comprehensive multimodal data for emotion recognition but is limited by its participant demographics and potential cultural bias. CK+ is a substantial dataset for facial expression recognition, while FIJA is valuable for studying affective dynamics during social interactions but has a limited sample size. SEMAINE supports research in emotionally grounded machine-human interactions, but with a moderate sample size. RECOLA enables real-time affect recognition but is limited to specific data collection settings, and DEAP is valuable for investigating the connection between emotions and physiological signals, although it has a relatively small sample size.
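One way to make this selection concrete is to tabulate the modalities each database provides and filter on the ones a project needs. The sketch below does this only for the databases whose Pros lists above name explicit modalities; the modality labels are simplified for illustration, and the `candidates` helper is hypothetical.

```python
# Modalities as named in the "Pros" lists above. Labels are simplified
# for illustration (for example, "audio" and "voice" both map to "speech").
DATABASE_MODALITIES = {
    "AFEW": {"face"},
    "MAHNOB-HCI": {"face", "speech", "physiology", "gaze"},
    "MGEED": {"face", "gesture", "speech", "physiology"},
    "CK+": {"face"},
    "SEMAINE": {"face", "speech"},
    "RECOLA": {"face", "speech", "physiology"},
    "DEAP": {"physiology"},
}


def candidates(required):
    """Return, in alphabetical order, the databases covering every required modality."""
    required = set(required)
    return sorted(
        name
        for name, modalities in DATABASE_MODALITIES.items()
        if required <= modalities  # subset test: all required modalities present
    )


print(candidates({"face", "physiology"}))
# ['MAHNOB-HCI', 'MGEED', 'RECOLA']
```

Modality coverage is only a first filter; sample size, annotation scheme, and licensing still need to be checked against each database's own documentation.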

As the field of affective computing continues to advance, the continuous development of high-quality databases will play a vital role in enabling further progress in this exciting area of research. By understanding and utilizing the strengths of these databases, researchers can continue to expand our knowledge and application of affective computing, leading to innovative and emotionally intelligent systems that enhance various aspects of our lives.

NB: These are not the only affective computing databases in common use. We have also analysed the top 10 open-source databases according to Papers with Code (https://paperswithcode.com); they are featured below and in the accompanying spreadsheet.

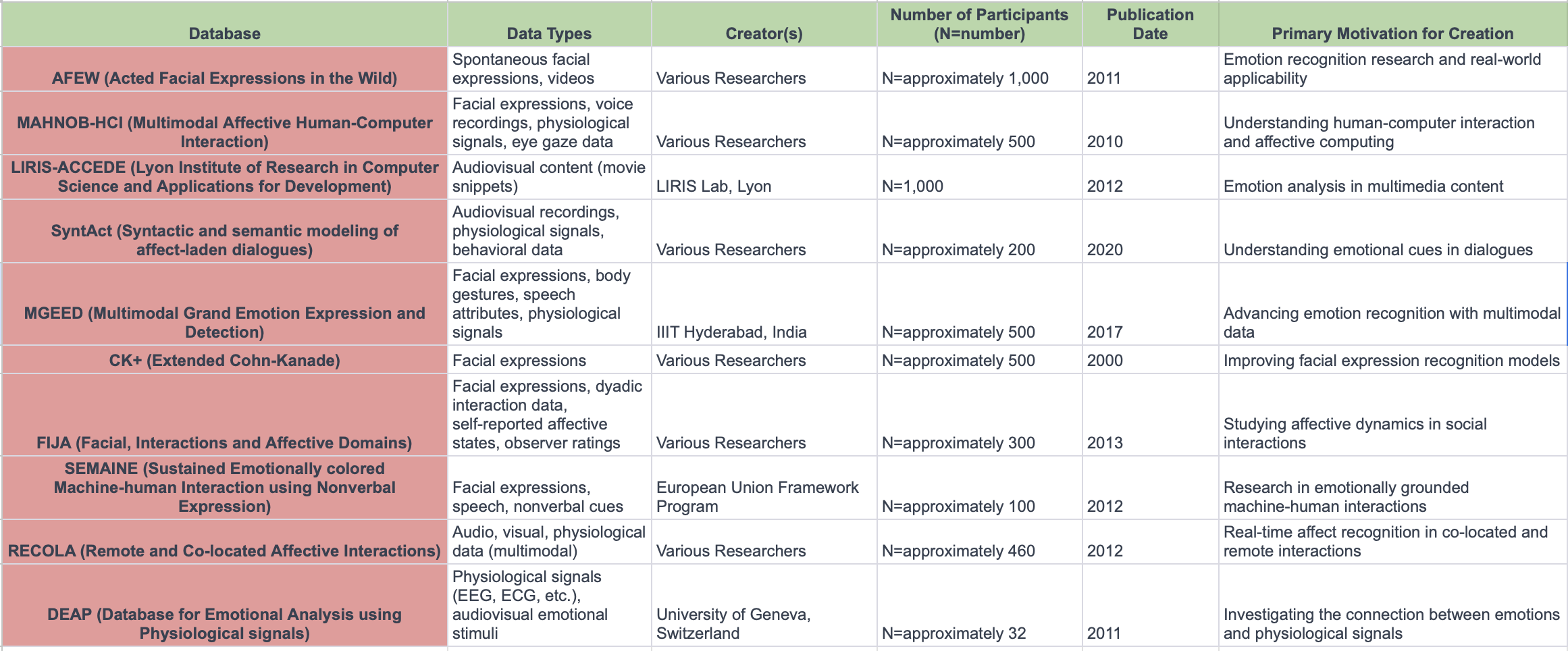

List of databases with listed estimated sample size, year of creation, authors and main use case: